This year's Summer School at CISPA - Helmholtz Center for Information Security will be supported by ELLIS and ELSA.

When: August 4-8, 2025

Where: CISPA - Helmholtz Center for Information Security, Saarbruecken, Germany.

We are inviting applications from graduate students and researchers in the areas of Computer Science and Cybersecurity with a focus on AI. During our annual scientific event, students will have the opportunity to follow one week of scientific talks and workshops, present their own work during poster sessions and discuss relevant topics with fellow researchers and expert speakers. The program will be complemented by social activities.

Application Process: Online applications are closed.

Notification of Acceptance: Acceptance decisions are made in several rounds, at the latest roughly 3 weeks after you apply.

Fee: €200 (includes the full program, food and beverages during the week, a weekly local bus ticket, and social activities)

Deadline for Regular Application: June 30, 2025.

Deadline for Late Application (waitlist): July 15, 2025.

Travel Grant: We are offering travel grants for students who participate actively in the whole event and present their work during a poster session. With your application, you can apply for a grant covering accommodation, train/plane tickets and local transport (actual travel costs incurred; economy flights, 2nd-class train tickets). Please do not book any travel arrangements before you have been selected by our jury and accepted to our Summer School. After acceptance, please send your planned travel arrangements to cysec-lab@cispa.de for approval before booking anything. We will confirm whether your expenses will be covered, and you will be reimbursed after successfully completing the Summer School.

Presentations: There will be several sessions during which participants can present their own work as a scientific poster. Presenting is not mandatory to receive a certificate of attendance, but we highly encourage you to contribute your work to these sessions, as it will provide you with valuable feedback from an expert audience and might kindle interesting discussions.

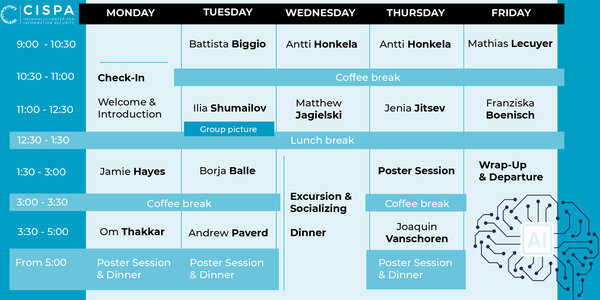

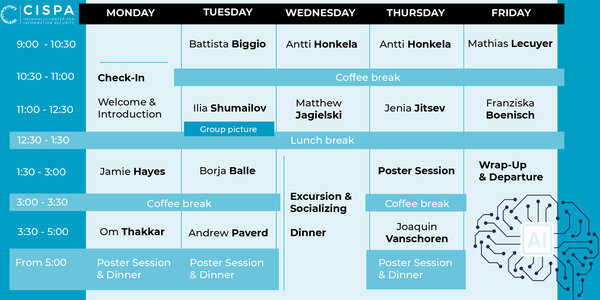

Summer School Program

Check-in for the Summer School on Monday is between 10 and 11 am.

The Summer School will kick off Monday morning around 11 am with a Welcome Session and the first talks. Tuesday to Thursday will be full-day programs from 9 am into the evening (ending with socializing at dinner). Each day there will be two coffee breaks, a lunch break and a dinner break, with roughly four content sessions per day plus poster sessions during dinner. On Wednesday afternoon, we will have a bus excursion with some local sightseeing, a walk and a joint dinner. The school will end early Friday afternoon, between 1:30 and 2 pm, so you can start your trip home.

Invited Speakers

Andrew Paverd (Microsoft)

- Title: Lessons learned from two years of generative AI vulnerability response

- Abstract: In August 2023, the Microsoft Security Response Center (MSRC) published its first official Vulnerability Severity Classification for AI Systems (https://aka.ms/aibugbar) and established a specialized team to respond to vulnerabilities in Microsoft's generative AI systems. Drawing from our experience over the past two years, this talk presents several "lessons learned" from a generative AI vulnerability response team, ranging from how to define the vulnerabilities themselves, through to designing new evaluation platforms and mitigations for the most pressing threats.

- Bio: Andrew Paverd (https://ajpaverd.org) is a Principal Research Manager in the Microsoft Security Response Center (MSRC), based in Cambridge, UK. In addition to responding to reported security and privacy vulnerabilities in Microsoft's AI systems, he and his team research new ways to build AI systems that are secure and trustworthy by design. His research interests also include web and systems security. He is currently serving as an Associate Chair for the IEEE Symposium on Security & Privacy. Prior to joining Microsoft, he was a Fulbright Cyber Security Scholar at the University of California, Irvine, and a Research Fellow in the Secure Systems Group at Aalto University. He received his DPhil from the University of Oxford in 2016.

Antti Honkela (University of Helsinki)

- Title 1: Introduction to Differential Privacy

- Abstract 1: Differential privacy (DP) is the gold standard solution for privacy-preserving machine learning. In this talk, I will present a modern introduction to DP, building on bounding the ability of an adversary to distinguish data of a single individual, as defined by f-DP. I will cover standard definitions of pure and approximate DP as well as basic mechanisms (Laplace, Gaussian) that have more informative interpretations via f-DP.

- Title 2: Differentially Private Deep Learning

- Abstract 2: Differential privacy (DP) can ensure that deep learning models do not memorise and leak their training data. DP fine-tuning of pre-trained models has recently made this practical, achieving high utility even under strong privacy guarantees for the fine-tuning data. My talk will provide a broad overview of DP deep learning, including optimisation algorithms, privacy accounting, hyperparameter choice and optimisation, different fine-tuning strategies, as well as privacy auditing.

- Bio: Antti Honkela is Professor of Machine Learning and AI at the University of Helsinki, Finland. He leads privacy research areas at the Finnish Center for Artificial Intelligence (FCAI) and the European Lighthouse in Secure and Safe AI (ELSA). He serves in multiple advisory positions for the Finnish government on the privacy of health data. Prof. Honkela's research interests center around privacy of machine learning, including differential privacy and privacy attacks, both in deep learning and Bayesian machine learning, as well as their applications especially in computational biology and health. Prof. Honkela regularly serves as an area chair for leading machine learning conferences, including as a senior area chair at NeurIPS 2025.

Battista Biggio (University of Cagliari/Pluribus One)

- Title: Machine Learning Security in the Age of Foundation Models

- Abstract: In this talk, I will briefly review some recent advancements in machine learning security with a critical focus on the main factors that are hindering progress in this field. These include the lack of an underlying, systematic, and scalable framework to properly evaluate machine-learning models under adversarial and out-of-distribution scenarios, along with suitable tools for easing their debugging. The latter may be helpful in unveiling flaws in the evaluation process, as well as the presence of potential dataset biases and spurious features learned during training. I will finally report concrete examples of what our laboratory has been recently working on to enable a first step towards overcoming these limitations, in the context of malware detection and web security, as well as in the context of large language and multimodal models.

- Bio: Battista Biggio (MSc 2006, PhD 2010) is Full Professor of Machine Learning at the University of Cagliari, Italy, and research co-director of AI Security at the sAIfer lab (www.saiferlab.ai). He has made pioneering contributions to machine-learning security, for which he received the 2022 ICML Test of Time Award and the 2021 Best Paper Award and Pattern Recognition Medal from Elsevier Pattern Recognition. He has managed more than 10 research projects, including national and EU-funded projects, totaling more than 1.5M€ in the last 5 years. He regularly serves as Area Chair for top-tier conferences in machine learning and computer security like NeurIPS and the IEEE Symposium on Security and Privacy. He is an Associate Editor-in-Chief of Pattern Recognition, and chaired IAPR TC1 (2016-2020). He is a Fellow of IEEE and AAIA, a Senior Member of ACM, and a member of IAPR and ELLIS.

Borja Balle (DeepMind)

- Title: Towards Privacy-Aware AI agents

- Abstract: In this talk I will provide an introduction to *agentic privacy*, a research area concerned with building AI agents that can handle user data in a privacy-conscious way. Throughout the talk I will discuss recent agentic privacy works from our team exploring questions like: how do we formalize agentic privacy goals in LLM-based agents, how do we protect such agents from adversarial attacks, and how do we create benchmarks to measure agentic privacy performance. I will also provide a list of open challenges in this space.

- Bio: Borja Balle is a Staff Research Scientist at Google DeepMind. His current research interests include privacy-conscious AI agents, differentially private training for large-scale models, privacy auditing to identify implementation bugs in differentially private mechanisms, distributed differential privacy mechanisms, and the semantics of differential privacy. Before joining DeepMind in 2019, Borja was an Applied Scientist at Amazon Research Cambridge, a Lecturer in Data Science at Lancaster University, and a postdoctoral fellow at McGill University. Borja served as workshops chair for NeurIPS 2015, and has co-organized multiple international workshops on privacy in machine learning (NeurIPS 2021, 2020, 2019, CCS 2019, ICML 2018, DALI 2017, NeurIPS 2016) and spectral learning techniques (ICML 2014, NeurIPS 2013, ICML 2013). His research has been recognized with several awards, including distinguished paper awards at USENIX 2023 and CCS 2023.

Ilia Shumailov (DeepMind)

- Title: Beyond `model.generate()`: can I even tell what is going on and why it matters

- Abstract: `model.generate()` is magic: it works! But why does it work, and what is actually hiding behind this simple functional interface? This talk moves beyond model performance to inspect its untrusted origins. We'll explore how today's ML pipelines, built on layers of pre-trained models and third-party dependencies, are fertile ground for problems.

Jamie Hayes (Google DeepMind)

- Title: Improving Gemini's Robustness to Indirect Prompt Injections

Jenia Jitsev (LAION)

- Title: Open Foundation Models - Scaling Laws and Generalization

- Abstract: Obtaining models that generalize well and show transfer across various tasks and conditions following generalist pre-training is one of the most important recent breakthroughs in machine learning. Such foundation models exhibit scaling laws, showing improved generalization with increasing model, data and compute scales during pre-training. Deriving scaling laws has emerged as an important approach to predicting various model properties and functions, including generalization and transfer, at larger scales from experiments executed at small scales. Scaling laws can also be used to systematically compare the resulting models and learning procedures. We show how such a derivation can be conducted to provide accurate prediction and comparison of capabilities across scales, using open language-vision foundation models and datasets as examples. We discuss the importance of open foundation models that ensure full reproducibility of their whole research pipeline (data, training, evaluation) for scaling law studies. Further, measuring generalization is a crucial part of establishing scaling laws, and we show that this task is far from solved. We highlight failures of standardized benchmarks to detect severe generalization deficits in current frontier models, and propose new measurement tools based on simple problems and their controlled variations. The aim is to create corresponding benchmarks that properly assess model generalization and can detect its breakdown.

Joaquin Vanschoren (TU Eindhoven)

- Title: Safety Benchmarks for General-Purpose AI Models

Mathias Lécuyer (University of British Columbia)

- Title: Adversarial Robustness and Privacy-Measurements Using Hypothesis-Tests

- Abstract: ML theory usually considers model behaviour in expectation. In practical AI deployments, however, we often expect models to be robust to adversarial perturbations, in which a user applies deliberate changes to an input to influence the prediction of a target model. For instance, such attacks have been used to jailbreak aligned foundation models out of their normal behaviour. Given the complex models that we now deploy, how can we enforce such robustness properties while keeping model flexibility and utility? I will present recent work on Adaptive Randomized Smoothing (ARS), an approach we developed to certify the predictions of test-time adaptive models against adversarial examples. ARS extends the analysis of randomized smoothing using f-Differential Privacy, to certify the adaptive composition of multiple steps during model prediction. We show how to instantiate ARS on deep image classification to certify predictions against adversarial examples of bounded L∞ norm. If time permits, I will also connect f-Differential Privacy's hypothesis testing view of privacy to the audit of data leakage in large AI models. Specifically, I will discuss a new data leakage measurement technique we developed that does not require access to in-distribution non-member data. This is particularly important in the age of foundation models, which are often trained on all data available at a given time. It is also related to recent efforts in detecting data use in large AI models, a timely question at the intersection of AI and intellectual property.

- Bio: Mathias Lécuyer is an Assistant Professor at the University of British Columbia. Before that, he was a postdoctoral researcher at Microsoft Research, New York. He received his PhD from Columbia University. Mathias works on trustworthy AI, on topics including privacy, robustness, explainability, and causality, with a specific focus on applications that provide rigorous guarantees. Recent impactful contributions include the first scalable defence against adversarial examples with provable guarantees (now called randomized smoothing), as well as system support for differential privacy accounting.

Matthew Jagielski (Google DeepMind)

Om Thakkar (OpenAI)

- Title: Privacy-Leakage in Speech Models: Attacks and Mitigations

- Abstract: Recent research has highlighted the vulnerability of neural networks to unintended memorization of training examples, raising significant privacy concerns. In this talk, we first explore two primary types of privacy leakage: extraction attacks and memorization audits. Specifically, we examine novel extraction attacks targeting speech models and discuss efficient methodologies for auditing memorization. In the second half of the talk, we will present empirical privacy approaches that enable training state-of-the-art speech models while effectively reducing memorization risks.

CISPA Speakers

Mario Fritz

Franziska Boenisch

- Title: Understanding and Mitigating Memorization in Foundation Models

- Abstract: Memorization occurs when machine learning models store and reproduce specific training examples at inference time—a phenomenon that raises serious concerns for privacy and intellectual property. In this talk, we will explore what it means for modern ML models to memorize data, and why this behavior has become especially relevant in large foundation models. I will present concrete ways to define and measure memorization, show how it manifests in practice, and analyze which data is most vulnerable. We will examine both large self-supervised vision encoders and state-of-the-art diffusion models. For encoders, we identify the neurons responsible for memorization, revealing insights into internal model behavior and contrasting supervised with self-supervised training. For diffusion models, I will show how to localize memorization, prune responsible neurons, and reduce overfitting to the training data—helping to mitigate privacy and copyright risks while improving generation diversity.

More details will follow soon. We are publishing the program schedule during the month of April.

Please have a look at last year's Summer School on Usable Security, last year's Summer School on Privacy-Preserving Cryptography, Summer School 2023, Summer School 2022, or our Digital Summer School 2021 to get a general idea of the event.

If you have any questions about any of our summer schools, our Summer School team will be glad to help via summer-school@cispa.de.

Please note that we always publish speakers and topics/titles on our website as soon as they are confirmed. Please refrain from requesting titles, detailed topics, etc. via e-mail. If you prefer to wait to apply until the detailed program is finished, that is perfectly fine. We just want to give interested students the opportunity to register early and secure their spot ahead of time.

Frequently Asked Questions (Summer School FAQ)

1. Can I attend several Summer Schools?

Yes. If you have attended one of our Summer Schools, you are of course welcome to apply again in any following year. If there are two Summer Schools in one year, you can apply for both. It is advisable to mention a specific research focus in your application that aligns with your interests and your prior education.

2. What criteria are considered in the selection process of participants?

Our jury selects the participants of the Summer Schools. We have strict criteria for selecting participants due to the high number of applications compared to the limited number of available spots. We give priority to those currently studying or with a background in the Summer School's topic. Foundational knowledge in the field is required, and participants should have reached a certain academic level. For instance, individuals in the early stages of a Bachelor's degree may not qualify. This is important because the Summer School is interactive, and prior knowledge is crucial for active participation.

3. How does the travel grant work?

You can apply for a travel grant with your application if your travel costs are not reimbursed by your employer. Our jury will select participants and approve travel grants subject to availability. You will only receive reimbursement after successfully completing the Summer School, which means actively attending every day of the event. Another requirement for reimbursement is that you actively participate in the Summer School by presenting a poster during the poster session. There might be additional requirements for a specific Summer School, which will be mentioned on the event's website. If your travel grant was approved, you need to hand in receipts for all travel-related expenses after completing the Summer School. The participation fee secures your spot for the event and covers catering, drinks, course materials and the full program. Thus, it cannot be refunded via the travel grant. Only receipts dated from one day before the start of the event through the last day of the event are eligible for reimbursement. Exceptions require prior approval via email. Please book accommodation from our recommendation list when possible, or contact us for approval prior to booking.

4. What do I have to consider for the format of my poster presentation?

Your poster should be in portrait format and A0 size. Please bring your printed poster with you. If you cannot print it yourself, send it to us as a PDF/X file via email by the deadline communicated after your acceptance. Each presentation will be limited to 5 minutes, followed by a short discussion.

5. What if I have certain dietary requirements?

If you have specific dietary restrictions, please include that information in your application. We strive to accommodate all dietary needs to the best of our ability. Additionally, rest assured that there is always a vegetarian option available for individuals with restrictions concerning meat.

6. What do I have to bring?

Bring your own laptop. Course materials and catering during the event are provided and included in the fee. After the event, you will receive the handouts and presentations of every speaker who agreed to share them.

7. Do I get a certificate of participation?

Yes, a certificate will be provided if you successfully attend all days of the Summer School.

8. What if I bring a person who does not participate and we share a room?

We can only reimburse costs for participants. If you bring your partner or a friend to share your hotel room, please provide receipts and comparison prices for the expenses you would have incurred if you had traveled alone, and we will reimburse accordingly.

9. What if I want to share a room with another Summer School participant?

Both individuals should ensure the hotel receipt includes both names. Submit this receipt, and each of you will receive half the reimbursement for the hotel room cost.

10. What if I have to cancel my participation?

If you cancel at short notice after confirming and paying the participation fee, refunds cannot be issued. You can, however, name a substitute participant with similar qualifications. Refunds are contingent on having enough time to rearrange event planning (e.g. transport and catering).

If you want to be informed about scientific events and regular summer schools, please subscribe to our newsletter.